The Stack Part 2: Automating Deployments via CI

In the last post we created our Control Tower structure with all of our AWS Accounts in it. In this post we will be automating our deployment process for each of these environments. See the full overview of posts here.

At the end of this post we will have:

- A workflow for bootstrapping our AWS Accounts for CDK (see here).

- A workflow for deploying our CDK stacks, including synthesizing and testing beforehand (see here).

- Automatic staggered deployments when changes are merged to our main branch.

- A fallback to manual deployments if we need it.

If you want to jump straight to the code, you can find it in the GitHub repository which links directly to the Part 2 branch.

Otherwise, let's jump in!

AWS: Setting up Credentials

We will need to set up credentials for our GitHub Actions to be able to deploy to our AWS Accounts. For now, we will focus on the following of our accounts as deployment targets:

- Integration Test

- Production Single-tenant

- Production Multi-tenant

For each of these we will need to set up IAM credentials that we can use in GitHub Actions to deploy our resources into each of these accounts.

Let's get set up. First we'll define a group for the user to go into, create the user, and then create the access keys for the user:

- Go to the AWS Console -> IAM.

- Go into User groups.

- Create a new group called ci-github-deployment.

- Give it the AdministratorAccess policy for now.

- Go into Users.

- Create a new user called ci-github-deployment without console access.

- Add it to the ci-github-deployment group.

Finally, we need to create the access keys for the user:

- Go into the newly created user.

- Go into Security credentials and create a new access key.

- Choose Command Line Interface (CLI) and click the checkbox to confirm.

- Set the Description tag value to Automatic deployments from GitHub Actions or something appropriate.

- Note down your Access Key and Secret Key somewhere safe (we'll need them later).

Repeat this process for Integration Test, Production Single-tenant, and Production Multi-tenant.

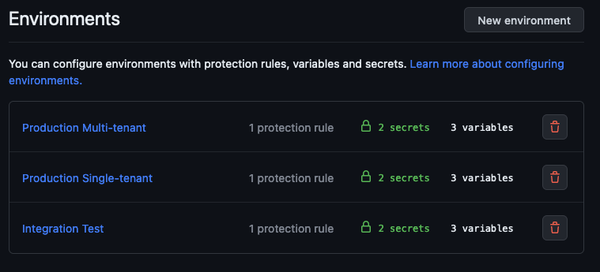

GitHub: Setting up Environments

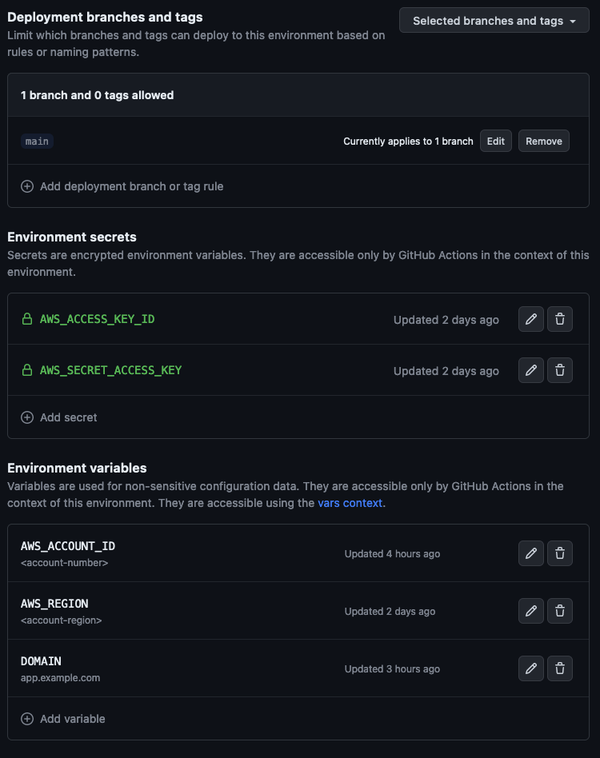

For our GitHub Actions workflows to work, we need to set up our Environments and configure a couple of Environment variables and secrets.

- Go to your repository Settings -> Environments

- Create your environments, e.g. Integration Test

- [Recommended] Restrict deployments to the main branch

- Set up the secrets for AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY

- Set up the variables for AWS_ACCOUNT_ID, AWS_REGION, and DOMAIN (where your app will live, e.g. app.example.com, integration.example.com, and single.example.com)

Repeat those steps with the relevant values for each of the environments: Integration Test, Production Single-tenant, and Production Multi-tenant.

Your Environment overview will look like this:

And each environment will roughly look like this:

CDK: Infrastructure as Code

CDK is our tool of choice for Infrastructure as Code. We'll start from the default template and adjust it to use Bun which simplifies the process of running CDK commands while using TypeScript.

Instead of setting this up from scratch, start from the template for this step in the GitHub repository. We'll go through what this contains in the next two sections.

Our overall aim is to structure our CDK into four main groupings:

- Global: "Global" (often us-east-1) specific things such as ACM Certificates for CloudFront, and we'll also put Hosted Zones here

- Cloud: Region-specific, infrequently changing things such as VPC, Region-specific Certificates, etc.

- Platform: DynamoDB, Cache, SQS

- Services: Lambdas, API Gateway, etc.

This split is based on how frequently something changes, and allows us to deploy frequently changing stacks without having to also look at things that very rarely change. In this part of the series we will set up the Global stack.

As you can see in the GitHub repository, we structure our CDK stack as follows:

- deployment/: The root folder for all things CDK
  - bin/: The entry point for all our stacks
    - deployment.ts: Our "executable" that CDK will run (defined in cdk.json)
    - helpers.ts: Helper functions to make our CDK code more readable and safe to run
  - lib/: The actual logic of our CDK stacks
    - global/: Our 'Global' layer containing all the resources that are shared across all stacks
      - stack.ts: Gathers all of our 'Global' stacks into one stack
      - domain.ts: Sets up our Hosted Zone and ACM certificates

This might seem like overkill right now, but will benefit us quite quickly as we start adding more stacks to our project.

In deployment.ts you'll see the root of our CDK stack. This is where we will define the four layers we mentioned earlier: Global, Cloud, Platform, and Services. In CDK terminology these are called Stacks.

For now, we will only define the Global layer:

    // ...imports
    // ...

    /**
     * Define our 'Global' stack that provisions the infrastructure for our application, such
     * as domain names, certificates, and other resources that are shared across all regions.
     *
     * ```bash
     * bun run cdk deploy --concurrency 6 'Global/**'
     * ```
     */
    // ...
    if (matchesStack(app, globalStackName)) {
      // ...
    }

We've set up some conveniences to easily run a single stack, via matchesStack, and to validate our environment variables, via validateEnv.
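
To make the idea behind these two helpers concrete, here is a minimal sketch of what such functions could look like. The signatures and the selector convention are assumptions made for illustration; the versions in the repository's helpers.ts may well differ.

```typescript
// Hypothetical sketches of the two helpers; the repository's versions may differ.

/**
 * Return the requested environment variables, throwing a single error that
 * lists every variable that is missing or empty.
 */
export function validateEnv<K extends string>(
  names: readonly K[],
  env: Readonly<Record<string, string | undefined>>,
): Record<K, string> {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  const values = {} as Record<K, string>;
  for (const name of names) {
    values[name] = env[name] as string;
  }
  return values;
}

/**
 * Decide whether a stack should be set up for the given selectors.
 * An empty selector list selects everything; otherwise a selector matches
 * when it equals the stack name or starts with "<stackName>/".
 */
export function matchesStack(stackName: string, selectors: readonly string[]): boolean {
  if (selectors.length === 0) {
    return true;
  }
  return selectors.some(
    (selector) => selector === stackName || selector.startsWith(`${stackName}/`),
  );
}
```

With helpers along these lines, the entry point could call validateEnv(["DOMAIN"], process.env) before building anything, so a missing variable fails early with a readable message instead of halfway through a deployment.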

Our GlobalStack is then defined in lib/global/stack.ts, and more or less just pieces together the types and the sub-stacks in the global/ directory.

The interesting bit here is the call to new domain.Stack, which is what actually kicks off the provisioning of resources. These are defined inside the lib/global/domain.ts file, one layer deeper:

    // ...imports
    // ...

And finally we get to the interesting part of it all in lib/global/domain.ts. This is the first place we are actually defining resources that will be deployed to AWS, by calling the CDK Constructs that are available to us. Construct is the CDK terminology for the actual resources we create, i.e. our building blocks.

We create our Hosted Zone via new route53.HostedZone and our ACM certificate via new acm.Certificate. You can find out more about each of these in the CDK docs.

Let's get our resources defined:

    // ...imports
    // ...

    /**
     * Set up a Hosted Zone to manage our domain names.
     *
     * https://docs.aws.amazon.com/cdk/api/v2/docs/aws-cdk-lib.aws_route53.HostedZone.html
     */
    // ...

This sets up a Hosted Zone and an ACM certificate for our domain, and configures it to validate the Certificate via DNS validation.
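
As a rough illustration of the two constructs working together, the sketch below defines a stack with a Hosted Zone and a DNS-validated certificate. It assumes aws-cdk-lib v2 and the constructs package are installed; the class name DomainStack, the domain prop, and the wildcard alternative name are assumptions for the example, not the repository's code.

```typescript
import * as cdk from "aws-cdk-lib";
import * as acm from "aws-cdk-lib/aws-certificatemanager";
import * as route53 from "aws-cdk-lib/aws-route53";
import { Construct } from "constructs";

// Hypothetical props: the stack in the repository may take different ones.
export interface DomainStackProps extends cdk.StackProps {
  /** The domain to manage, e.g. "app.example.com". */
  readonly domain: string;
}

export class DomainStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props: DomainStackProps) {
    super(scope, id, props);

    // Hosted Zone that will hold the DNS records for the domain.
    const hostedZone = new route53.HostedZone(this, "HostedZone", {
      zoneName: props.domain,
    });

    // Certificate for the domain (plus a wildcard, as an assumption), validated
    // through DNS records that CDK creates in the Hosted Zone above.
    new acm.Certificate(this, "Certificate", {
      domainName: props.domain,
      subjectAlternativeNames: [`*.${props.domain}`],
      validation: acm.CertificateValidation.fromDns(hostedZone),
    });
  }
}
```

DNS validation only completes once the domain's name servers point at the new Hosted Zone, which is why the deployment steps later in this post involve updating the name servers at your registrar while the deployment is running.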

Automated Deployments via GitHub Actions

As you can see in the GitHub repository, we have two workflows to deploy things. They share much of the same logic, so let's focus on the commonalities first.

Both of them do a few things:

- Set up a trigger on workflow_dispatch so that we can manually trigger the workflow.

- Set up a matrix of environments to deploy to.

- Configure an environment and a concurrency group. The concurrency group is important, since we don't want to deploy to the same environment at the same time.

- Install bun and our dependencies.

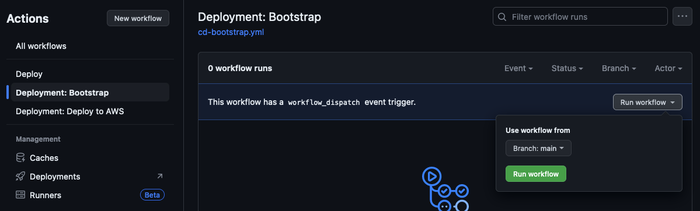

The GitHub Actions YAML might feel a bit verbose, so let's break it down a bit. We'll first focus on cd-bootstrap, which bootstraps our AWS Accounts for CDK.

Bootstrap Workflow

We first define our name and the trigger. Because we only want this to be triggered manually (bootstrapping just needs to run once) we can use workflow_dispatch which allows us to trigger it from the GitHub Actions UI (docs here):

    name: "Deployment: Bootstrap"

    on:
      workflow_dispatch:
    # ...

With that in place, let's take a look at the logic we are running.

A neat way to "do the same thing" over a set of different things is to utilize the matrix feature of GitHub Actions (docs here). We can define a list of environments (docs here) to run our workflow on, and then use that list to run the same steps for each environment.

This is what the strategy section does, and it then feeds this into the environment which tells GitHub Actions which environment variables and secrets are available, as well as automatically tracks our deployments in the GitHub UI:

    # ...
    jobs:
      bootstrap:
        name: bootstrap
        runs-on: ubuntu-latest
        strategy:
          fail-fast: false
          matrix:
            # Add new environments to this list to run bootstrap on them when manually triggered.
            environment:
              - "Integration Test"
              - "Production Single-tenant"
              - "Production Multi-tenant"
        environment: ${{ matrix.environment }}
        # ...
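
Conceptually, a matrix expands into one job per combination of its values. The following sketch models that expansion in plain TypeScript; expandMatrix is a hypothetical helper written for illustration and has nothing to do with how GitHub implements the feature.

```typescript
type Matrix = Record<string, readonly string[]>;

/** Expand a matrix into every combination of its values (a cartesian product). */
export function expandMatrix(matrix: Matrix): Record<string, string>[] {
  let combinations: Record<string, string>[] = [{}];
  for (const [key, values] of Object.entries(matrix)) {
    combinations = combinations.flatMap((combination) =>
      values.map((value) => ({ ...combination, [key]: value })),
    );
  }
  return combinations;
}

// The same single-dimension matrix as in the bootstrap workflow.
const jobs = expandMatrix({
  environment: ["Integration Test", "Production Single-tenant", "Production Multi-tenant"],
});

// One line per generated job.
for (const job of jobs) {
  console.log(`bootstrap (${job.environment})`);
}
```

With a single dimension this yields one job per environment. Adding a second dimension, say a list of regions, would multiply the number of jobs, which is worth keeping in mind before growing the matrix.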

Now, what would happen if we ran this multiple times in parallel on the same environment? Probably not something we'd like to find out.

To prevent this, we can tell GitHub to only allow one job to run at a time, given a group identifier. We do this by adding a concurrency control to our workflow (docs here):

    # ...
        # Limit to only one concurrent deployment per environment.
        concurrency:
          group: ${{ matrix.environment }}
          cancel-in-progress: false
        # ...
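
One way to picture a concurrency group with cancel-in-progress: false is as a queue per group key: work in the same group runs one at a time, while different groups proceed independently. The sketch below models only that idea. The ConcurrencyGroups class is hypothetical and is not GitHub's scheduler, which, for instance, keeps at most one pending run per group.

```typescript
/** Runs tasks that share a group key one at a time, in submission order. */
export class ConcurrencyGroups {
  private readonly tails = new Map<string, Promise<void>>();

  run<T>(group: string, task: () => Promise<T>): Promise<T> {
    const previous = this.tails.get(group) ?? Promise.resolve();
    // Start only after the previous task in this group has settled.
    const result = previous.then(task);
    // Keep the queue usable even if this task fails.
    this.tails.set(
      group,
      result.then(
        () => undefined,
        () => undefined,
      ),
    );
    return result;
  }
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

/** Simulate two deployments to one environment and one to another. */
export async function demo(): Promise<string[]> {
  const groups = new ConcurrencyGroups();
  const events: string[] = [];
  const deploy = (environment: string, run: number) =>
    groups.run(environment, async () => {
      events.push(`start ${environment} #${run}`);
      await sleep(10);
      events.push(`end ${environment} #${run}`);
    });

  await Promise.all([
    deploy("Integration Test", 1),
    deploy("Integration Test", 2),
    deploy("Production Single-tenant", 1),
  ]);
  return events;
}
```

In this model the second Integration Test deployment only starts after the first one has ended, while the Production Single-tenant deployment starts right away because it belongs to a different group.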

And finally, we get to the actual steps of logic we are performing. First we'll check out our code, set up bun, and then use bun to install our dependencies:

    # ...
        steps:
          - uses: actions/checkout@v3
          - uses: oven-sh/setup-bun@v1

          - name: Install dependencies
            working-directory: ./deployment
            run: bun install
          # ...

Now we're ready to bootstrap! We use the variables and secrets we defined previously. Since we told GitHub which environment we are running in, it will automatically know where to pull this from. This saves us the headache of weird hacks such as AWS_ACCESS_KEY_ID_FOR_INTEGRATION_TEST or something similar.

We pull in what we need, and then run the cdk bootstrap command via bun:

    # ...
          - name: Bootstrap account
            working-directory: ./deployment
            env:
              AWS_REGION: ${{ vars.AWS_REGION }}
              AWS_DEFAULT_REGION: ${{ vars.AWS_REGION }}
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
            run: bun run cdk bootstrap

Deployment Workflow

Our deployment flow gets a bit more complicated. We're building for the future here, so we want to put in place a few checks to make sure we don't accidentally deploy something broken. Or, if we do, we can at least stop early before it affects our users.

We will be building up the following flow, illustrated in the diagram below:

This is what is called a "staggered deployment", but across our environments:

- We roll out to our Integration Test environment in Stage 1.

  - We validate that our IaC actually works.

- Once the deployment is done, we perform checks against it to validate the health of the deployment (e.g. End-to-End tests, smoke tests, etc.)

  - We validate that our application actually works with our changes to our infrastructure.

- If everything looks good, we proceed to Stage 2, which deploys both our Production Single-tenant and Production Multi-tenant environments in parallel.

- We do a final check that all is good, and then we're done!

This helps us build confidence that our deployments work, since our aim is to deploy changes immediately as they are merged into our main branch.

Part 1: Structuring the deployment flow

Let's take a look at how we do this. First, we'll set up our triggers. We want to both allow manually triggering a deployment, again via workflow_dispatch, but we also want to immediately deploy when changes are merged to our main branch:

    name: "Deployment: Deploy to AWS"

    on:
      workflow_dispatch:
      push:
        branches:
          - main
    # ...

All well and good, so let's start defining our jobs. Ignore the uses for now; that's a reusable workflow which we'll get back to later in Part 2 of this section:

    # ...
    jobs:
      # Stage 1 tests that the deployment is working correctly.
      stage-1:
        name: "Stage 1"
        uses: ./.github/workflows/wf-deploy.yml
        with:
          environment: "Integration Test"
        secrets: inherit
      # ...

We first initiate our Stage 1 deployment, specifying that it's the Integration Test environment. We also allow the reusable workflow (defined in wf-deploy.yml) to inherit all secrets from the caller.

Next, we want to run our checks, but only after our Stage 1 job has finished running successfully. To do this we use needs to define a dependency on a previous job (docs here).

    # ...
      # Run tests against the environments from Stage 1.
      stage-1-check:
        name: "Stage 1: Check"
        needs: [stage-1]
        runs-on: ubuntu-latest
        steps:
          - name: "Check"
            run: |
              echo "Checking 'Integration Test'..."
              # Run tests against the environment...
              # Or alert/rollback if anything is wrong.
      # ...

We aren't doing much interesting for now in our test job, since we are only deploying a Domain, but this will be helpful later on when we start setting up our Frontend and APIs.

Similarly, we use needs again to specify how we move into Stage 2. We first set up Production Single-tenant:

    # ...
      # Stage 2 is our more critical environments, and only proceeds if prior stages succeed.
      stage-2-single:
        name: "Stage 2: Single-tenant"
        needs: [stage-1-check]
        uses: ./.github/workflows/wf-deploy.yml
        with:
          environment: "Production Single-tenant"
        secrets: inherit

      stage-2-single-check:
        name: "Stage 2: Check Single-tenant"
        needs: [stage-2-single]
        runs-on: ubuntu-latest
        steps:
          - name: "Check"
            run: |
              echo "Checking 'Production Single-tenant'..."
              # Run tests against the environment...
              # Or alert/rollback if anything is wrong.
      # ...

And do the same for our Production Multi-tenant environment:

    # ...
      stage-2-multi:
        name: "Stage 2: Multi-tenant"
        needs: [stage-1-check]
        uses: ./.github/workflows/wf-deploy.yml
        with:
          environment: "Production Multi-tenant"
        secrets: inherit

      stage-2-multi-check:
        name: "Stage 2: Check Multi-tenant"
        needs: [stage-2-multi]
        runs-on: ubuntu-latest
        steps:
          - name: "Check"
            run: |
              echo "Checking 'Production Multi-tenant'..."
              # Run tests against the environment...
              # Or alert/rollback if anything is wrong.
      # ...

We could have used a build matrix again, but that would mean that the checks for Stage 2 would only proceed after both of the deployment jobs had completed. We would prefer that each check runs as soon as its own deployment is done, so instead we split these two up into their own jobs manually.
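
To see why separate jobs behave differently from a matrix here, it can help to model the jobs as a small dependency graph in which every job starts as soon as the jobs it needs have finished. The sketch below is such a model. The runPipeline function is hypothetical, and the durations are made up purely to make the ordering visible.

```typescript
interface Job {
  readonly name: string;
  readonly needs: readonly string[];
  /** Made-up duration, only there to make the ordering visible. */
  readonly durationMs: number;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

/** Start every job as soon as all of its `needs` have finished; return the completion order. */
export async function runPipeline(jobs: readonly Job[]): Promise<string[]> {
  const byName = new Map(jobs.map((job) => [job.name, job] as const));
  const started = new Map<string, Promise<void>>();
  const finished: string[] = [];

  const run = (name: string): Promise<void> => {
    const existing = started.get(name);
    if (existing) return existing;
    const job = byName.get(name);
    if (!job) throw new Error(`Unknown job: ${name}`);
    // Wait for all dependencies, then "run" the job itself.
    const promise = Promise.all(job.needs.map((need) => run(need))).then(async () => {
      await sleep(job.durationMs);
      finished.push(job.name);
    });
    started.set(name, promise);
    return promise;
  };

  await Promise.all(jobs.map((job) => run(job.name)));
  return finished;
}

// The same job names and dependencies as in the workflow above.
export const deployment: Job[] = [
  { name: "stage-1", needs: [], durationMs: 10 },
  { name: "stage-1-check", needs: ["stage-1"], durationMs: 5 },
  { name: "stage-2-single", needs: ["stage-1-check"], durationMs: 10 },
  { name: "stage-2-single-check", needs: ["stage-2-single"], durationMs: 5 },
  { name: "stage-2-multi", needs: ["stage-1-check"], durationMs: 80 },
  { name: "stage-2-multi-check", needs: ["stage-2-multi"], durationMs: 5 },
];

runPipeline(deployment).then((order) => console.log(order.join(" -> ")));
```

Because the multi-tenant deployment is given a longer duration in this model, the single-tenant check completes while the multi-tenant deployment is still running. That is the behavior we get from splitting the jobs, and the one we would lose with a shared matrix job.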

Voila 🎉 We've now set up the skeleton for the deployment flow we pictured earlier:

Part 2: Reusable workflow

To better reuse logic that is identical across jobs, or even across workflows and repositories, GitHub supports making workflows reusable (docs here).

We've done this in our wf-deploy.yml workflow, which we use in our stage-1, stage-2-single, and stage-2-multi jobs. Let's take a look at what it does.

First, we will need to define which inputs this workflow takes. Remember the with and secrets that we used earlier? That's how we pass information to our reusable workflow. We define these in the inputs section:

    name: Deploy

    on:
      workflow_call:
        inputs:
          environment:
            required: true
            type: string
    # ...

Here we simply specify that we require an environment to be passed along. We will automatically inherit the secrets, but we would otherwise specify those explicitly as well.

We can now proceed to the logic, which resembles the cd-bootstrap workflow we looked at earlier. We first set up our environment, concurrency group, and then install our dependencies:

    # ...
    jobs:
      deploy:
        name: "Deploy"
        runs-on: ubuntu-latest
        environment: ${{ inputs.environment }}
        # Limit to only one concurrent deployment per environment.
        concurrency:
          group: ${{ inputs.environment }}
          cancel-in-progress: false
        steps:
          - uses: actions/checkout@v3
          - uses: oven-sh/setup-bun@v1

          - name: Install dependencies
            working-directory: ./deployment
            run: bun install
          # ...

Before we proceed to actually deploying anything, we want to sanity check that our deployment looks valid. We do this by trying to first synthesize the whole deployment (some info on synth here), and then run whatever test suite we have:

    # ...
          - name: Synthesize the whole stack
            working-directory: ./deployment
            env:
              DOMAIN: ${{ vars.DOMAIN }}
              AWS_REGION: ${{ vars.AWS_REGION }}
              AWS_DEFAULT_REGION: ${{ vars.AWS_REGION }}
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
            run: bun run cdk synth --all

          - name: Run tests
            working-directory: ./deployment
            env:
              DOMAIN: ${{ vars.DOMAIN }}
              AWS_REGION: ${{ vars.AWS_REGION }}
              AWS_DEFAULT_REGION: ${{ vars.AWS_REGION }}
            run: bun test
          # ...

Everything should now be good, so let's run our actual deployment:

    # ...
          - name: Deploy to ${{ inputs.environment }}
            working-directory: ./deployment
            env:
              DOMAIN: ${{ vars.DOMAIN }}
              AWS_REGION: ${{ vars.AWS_REGION }}
              AWS_DEFAULT_REGION: ${{ vars.AWS_REGION }}
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
            run: bun run cdk deploy --concurrency 4 --all --require-approval never

And there we go! We've now automated our deployment flow, and no longer have to worry about manually deploying things to our environments.

Trigger the Workflows

Push your project to GitHub. You now have access to the workflows and can trigger them manually.

If you haven't done it already, let's run the Deployment: Bootstrap workflow first, to set up CDK on all accounts. Alternatively, jump to the section Manual alternative: Bootstrapping our Accounts for how to do this manually.

Next up, before we initiate the deployment it's recommended to be logged into your Domain Registrar that controls the DNS of your domain, so that you can quickly update your name servers to point to the Hosted Zone that we will be creating. This is necessary to DNS validate our ACM certificates.

Our process will go:

1. Open the DNS settings of your domain registrar

2. Trigger the Deployment: Deploy to AWS workflow to start the deployments

3. Log into the target AWS Account and go to the AWS Console -> Route 53 and select Hosted Zones

4. Wait for the Hosted Zone to be created, refresh the list, go into the Hosted Zone and copy the name servers, which will look something like this:

   ns-1234.awsdns-99.co.uk.
   ns-332.awsdns-21.net.
   ns-6821.awsdns-01.org.
   ns-412.awsdns-01.com.

5. Update the name servers in your domain registrar to point your chosen domain to the Name Servers

6. Repeat steps 3, 4, and 5 for each environment

This can easily take 5-15 minutes to complete the first time, depending on how quick you are at setting up your Name Servers.
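
If you would rather check the delegation from a script than keep refreshing a DNS lookup tool, a small helper along these lines could compare the name servers your resolver returns with the ones listed in the Hosted Zone. This is only a sketch: the function names are hypothetical, it assumes a Node-compatible runtime that provides node:dns/promises, and resolver caching can make the answer lag behind your change.

```typescript
import { resolveNs } from "node:dns/promises";

/** Lower-case a name server and drop the trailing dot that Route 53 displays. */
export function normalizeNameServer(nameServer: string): string {
  return nameServer.trim().toLowerCase().replace(/\.$/, "");
}

/** True when both lists hold the same name servers, ignoring order, case, and trailing dots. */
export function sameNameServers(
  expected: readonly string[],
  actual: readonly string[],
): boolean {
  const left = Array.from(new Set(expected.map(normalizeNameServer))).sort();
  const right = Array.from(new Set(actual.map(normalizeNameServer))).sort();
  return left.length === right.length && left.every((name, index) => name === right[index]);
}

/** Look up the NS records for `domain` and compare them with the Hosted Zone's name servers. */
export async function isDelegated(
  domain: string,
  hostedZoneNameServers: readonly string[],
): Promise<boolean> {
  const actual = await resolveNs(domain);
  return sameNameServers(hostedZoneNameServers, actual);
}
```

You would call isDelegated with your domain and the four name servers copied from the Hosted Zone, and retry for a while if it returns false shortly after updating the registrar.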

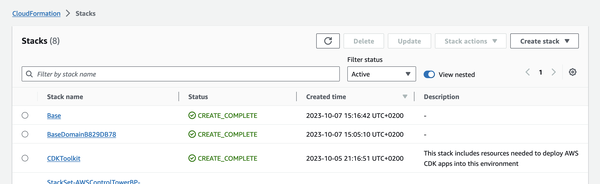

You can go and see the generated CloudFormation stacks in the AWS Console -> CloudFormation which will look something like this:

We've now set up the foundation for all of our future deployments of applications and services 🥳

Manual alternative: Bootstrapping our Accounts

Once you've cloned the GitHub repository (or made your own version of it), set up bun:

    curl -fsSL https://bun.sh/install | bash

Then we can install all of our dependencies for our CDK stack:

    cd deployment
    bun install

We’ll be setting up CDK on each of our accounts, so that we can start using it for deployments.

Assuming that you have already switched your CLI environment to point to the AWS account that you want to bootstrap:

    # Assuming we are still in the deployment folder
    bun run cdk bootstrap

This is essentially what the cd-bootstrap workflow does for you, across all the environments you've specified (you can adjust the list in the build matrix).

Manual alternative: Deployments

Now that we have bootstrapped our accounts, we can deploy our CDK stacks.

Similar to using the Workflow: Before we initiate the deployment, it's recommended to be logged into your Domain Registrar that controls the DNS of your domain, so that you can quickly update your name servers to point to the Hosted Zone that we will be creating. This is necessary to DNS validate our ACM certificates.

Our process will go:

1. Open the DNS settings of your domain registrar

2. Log into the target AWS Account and go to Route 53 -> Hosted Zones

3. Start the deployment

4. Wait for the Hosted Zone to be created, refresh the list, go into the Hosted Zone and copy the name servers

5. Update the name servers in your domain registrar to point your chosen domain to the Name Servers

Assuming you are ready for step 3, we can start the deployment. We'll assume that you are still in the deployment folder and that you have switched your CLI environment to point to the AWS account that you want to deploy to:

    DOMAIN="app.example.com" bun run cdk deploy --concurrency 4 --all

The DOMAIN environment variable is required here, since we need to know what domain we should use for the Hosted Zone.

Bonus: Justfile and just

It might seem overkill right now, but we will eventually have many different commands across many folder locations in our mono-repo setup. To make this a bit easier to work with, we can use the tool Just to help us out.

From just's README:

just is a handy way to save and run project-specific commands

From the installation instructions we can install it via:

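    # macOS:
    brew install just

    # Linux with prebuilt-mpr (https://docs.makedeb.org/prebuilt-mpr/getting-started/#setting-up-the-repository):
    sudo apt install just

    # Prebuilt binaries (assuming $HOME/.local/bin is in your $PATH):
    curl --proto '=https' --tlsv1.2 -sSf https://just.systems/install.sh | bash -s -- --to "$HOME/.local/bin"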

This allows us to set up a justfile in the root of our project, which we can then use to define shortcuts to our commands. For example, we can define a shortcut to run our CDK commands:

    # Display help information.
    # ...

    # Setup dependencies and tooling for <project>, e.g. `just setup deployment`.
    # ...

    # Deploy the specified <stack>, e.g. `just deploy 'Global/**'`, defaulting to --all.
    # ...

    # Run tests for <project>, e.g. `just test deployment`.
    # ...

We can now run our commands via just:

    # Setup our dependencies:
    just setup deployment

    # Run tests:
    just test deployment

    # Synthesize our CDK stack:
    # ...

    # Deploy our CDK stack:
    just deploy 'Global/**'

Next Steps

Next up is to add our first Frontend! Follow along in Part 3 of the series.